
Overview

Site operators now have greater control: they can choose which AI crawlers may access their material and set terms for how AI companies may use their content¹. AI companies will also be able to declare what their crawlers are used for (training, inference, or search), giving website owners a clearer basis for their decisions.

Cloudflare, one of the largest networks in the world, handles and protects traffic for nearly 20 percent of all websites. In September 2024, the company introduced a one-click option that lets website owners block AI crawlers. Since then, over one million customers have enabled this setting, giving them a straightforward and robust way to prevent scraping while they evaluate their approach to AI.

Building on this, Cloudflare is moving toward a permission-based system for AI crawler access. AI companies must now secure explicit approval from website owners before scraping content. When a new domain is registered with Cloudflare, owners are prompted to decide whether to permit AI crawlers, ensuring customers can make an informed choice about granting or denying access from the outset.
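To make the permission-based idea concrete, here is a minimal sketch of how an owner's choices could be represented: each crawler is allowed only for the purposes (training, inference, search) the owner has explicitly granted, and anything not granted is denied by default. The `CrawlerPolicy` class, the `Purpose` values and the crawler name `ExampleSearchBot` are hypothetical illustrations, not Cloudflare's actual configuration model or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    """Uses an AI company might declare for its crawler (illustrative)."""
    TRAINING = "training"
    INFERENCE = "inference"
    SEARCH = "search"


@dataclass
class CrawlerPolicy:
    """Hypothetical default-deny policy: access requires an explicit grant."""
    # Maps a crawler name to the set of purposes the site owner has approved.
    grants: dict = field(default_factory=dict)

    def allow(self, crawler: str, *purposes: Purpose) -> None:
        """Record the owner's explicit approval for the given purposes."""
        self.grants.setdefault(crawler, set()).update(purposes)

    def is_permitted(self, crawler: str, purpose: Purpose) -> bool:
        """Unknown crawlers and ungranted purposes are denied by default."""
        return purpose in self.grants.get(crawler, set())


policy = CrawlerPolicy()
policy.allow("ExampleSearchBot", Purpose.SEARCH)  # hypothetical crawler name

print(policy.is_permitted("ExampleSearchBot", Purpose.SEARCH))
print(policy.is_permitted("ExampleSearchBot", Purpose.TRAINING))
print(policy.is_permitted("UnknownBot", Purpose.SEARCH))
```

The design choice worth noting is the default: a crawler the owner has never heard of gets no access at all, which is what distinguishes a permission-based system from a block list.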

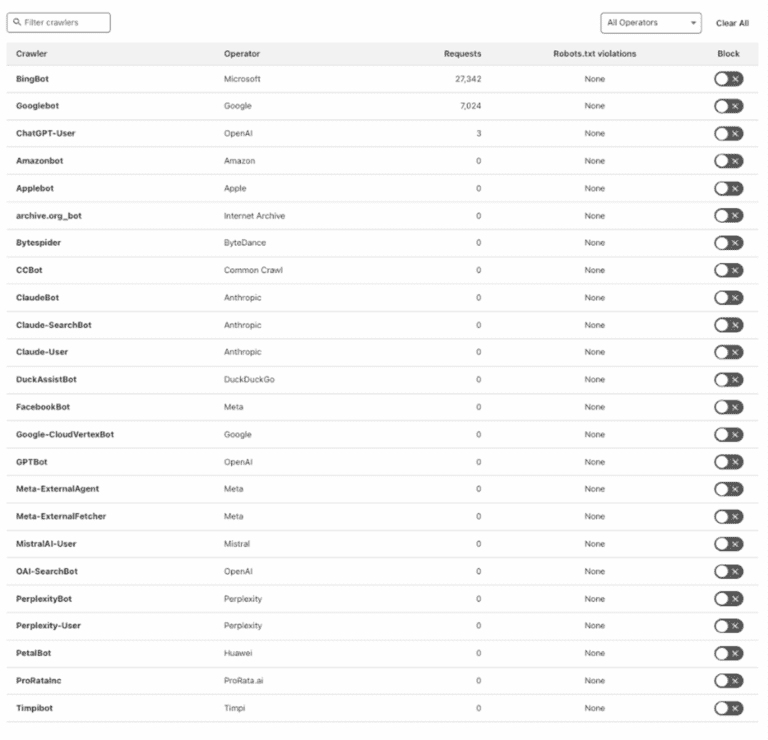

The following crawlers can currently be blocked:

Bot name | What it does
--- | ---
Google-Extended | Google's AI bot (not in the list above)
Googlebot | Google Search bot
Bingbot | Used for Bing AI and Search
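Crawlers announce themselves through the `User-Agent` request header, so a first-pass check is a case-insensitive search for a known token. The sketch below assumes a small, hand-picked token list and is not Cloudflare's detection logic; because headers can be spoofed, production systems typically also verify crawlers (Google, for example, documents reverse DNS checks for Googlebot). Google-Extended is deliberately absent: it is a robots.txt control token rather than a separate user agent, so it never appears in request headers.

```python
from typing import Optional

# Illustrative tokens only; real crawler lists change over time.
KNOWN_CRAWLERS = {
    "gptbot": "GPTBot",
    "claudebot": "ClaudeBot",
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the crawler name whose token appears in the User-Agent, if any.

    Only the header is inspected, and clients can set it to anything,
    so a match is a claim to be verified, not proof of identity.
    """
    ua = user_agent.lower()
    for token, name in KNOWN_CRAWLERS.items():
        if token in ua:
            return name
    return None


print(identify_crawler("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
print(identify_crawler("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```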

Blocking Google Gemini

Currently, Google-Extended is not on Cloudflare’s default list of bots to block because it is not categorised as an AI crawler or bot in the same way as others like GPTBot or ClaudeBot. Cloudflare’s AI bot blocking feature targets bots specifically identified as being used for AI training or generative AI applications, and its managed rule does not include search engine crawlers or bots that are primarily used for search indexing.

Cloudflare does not block Google-Extended by default because it is considered part of Google's combined search and AI services, and blocking it could have unintended consequences unless carefully controlled. Instead, Cloudflare recommends blocking Google-Extended explicitly in your robots.txt file, as this is the method Google recognises for opting out of having content used for AI training.
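As a sketch of the robots.txt route, the rules below disallow Google-Extended (plus GPTBot and ClaudeBot as further examples) across the whole site while leaving every other agent, including Googlebot, allowed. Python's standard `urllib.robotparser` is used only to sanity-check how the groups are matched; it is a simplified parser, so treat it as a quick local check rather than a guarantee of how Google or any other crawler interprets the file. The URL `https://example.com/blog/post` is a placeholder.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt: opt out of AI training crawlers, allow everyone else.
ROBOTS_TXT = """\
User-agent: Google-Extended
Disallow: /

User-agent: GPTBot
User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Report how each agent is treated for a sample page on the site.
for agent in ("Google-Extended", "GPTBot", "ClaudeBot", "Googlebot", "Bingbot"):
    allowed = parser.can_fetch(agent, "https://example.com/blog/post")
    print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```

Blocking Google-Extended this way leaves the Googlebot group untouched, which is why it should not affect standard search indexing.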


Should I Block AI Crawlers?

Blocking AI crawlers is a sensible and increasingly common choice if your site contains proprietary material or confidential information, or if you simply do not want your content used to train AI models without your permission.

The bottom line is that blocking AI crawlers is your call, based on your content strategy, business model, and tolerance for the risk of your data being used to train AI. Some publishers, particularly those with unique or valuable content, are opting to block AI crawlers to be on the safe side.

Key Reasons to Block AI Crawlers

- Prevent unauthorised use: Blocking helps stop your content from being ingested into large language models, which could later reproduce or rephrase your proprietary material.

- Protect intellectual property: If your website contains unique, copyrighted, or sensitive information, blocking AI crawlers can help safeguard your rights and reduce the risk of content misuse.

- Control over data usage: By blocking AI crawlers, you maintain more control over how and where your content appears, and you can negotiate licensing or data-sharing agreements on your terms.

- Reduce server load: AI crawlers can consume significant website resources while crawling, and as the number of AI platforms grows, so does the number of bots.
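To check whether crawler load is a real cost for your site, you can measure what share of requests AI crawlers account for in your access logs. This sketch assumes logs in the common Apache/Nginx "combined" format, where the User-Agent is the last quoted field, and uses a hypothetical, deliberately short list of AI crawler tokens; adjust both to your own setup. The sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Hypothetical, non-exhaustive list of AI crawler User-Agent tokens.
AI_CRAWLER_TOKENS = ("gptbot", "claudebot", "ccbot", "perplexitybot")

# In the "combined" log format the User-Agent is the final quoted field.
USER_AGENT_RE = re.compile(r'"([^"]*)"\s*$')


def ai_crawler_share(log_lines):
    """Return (per-token request counts, share of all requests from AI crawlers)."""
    counts = Counter()
    total = 0
    for line in log_lines:
        match = USER_AGENT_RE.search(line)
        if not match:
            continue  # skip lines that do not look like combined-format entries
        total += 1
        ua = match.group(1).lower()
        for token in AI_CRAWLER_TOKENS:
            if token in ua:
                counts[token] += 1
                break
    share = sum(counts.values()) / total if total else 0.0
    return counts, share


sample = [
    '203.0.113.5 - - [01/Jul/2025:10:00:00 +0000] "GET / HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.7 - - [01/Jul/2025:10:00:01 +0000] "GET /blog HTTP/1.1" 200 8200 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '192.0.2.9 - - [01/Jul/2025:10:00:02 +0000] "GET /about HTTP/1.1" 200 3100 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '203.0.113.5 - - [01/Jul/2025:10:00:03 +0000] "GET /shop HTTP/1.1" 200 4096 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
]
counts, share = ai_crawler_share(sample)
print(dict(counts), f"{share:.0%}")
```

Counting requests is only a proxy for resource use; weighting by response size (the field after the status code) would give a closer picture of bandwidth.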

Key Reasons to Allow AI Crawlers

- Increased Online Visibility and Search Rankings: AI crawlers, especially those integrated with search engines like Google, can improve your site’s visibility in AI-powered search results. As AI-driven search experiences become more prominent, being accessible to these bots can help your content appear in new types of search summaries and featured results, potentially driving more organic traffic to your site.

Blocking AI crawlers such as OpenAI’s GPTBot or Google-Extended will reduce your visibility in LLMs. It should not affect your site’s ranking in standard search engines, as these crawlers are separate from search indexing bots.

As AI search is a relatively new concept, there is little data yet on its conversion rates or on how much website traffic blocking might cost. Ultimately, if people are searching using LLMs, you will want to be found.

- Enhanced Thought Leadership and Brand Authority: When you permit AI bots to access your content, you are feeding the body of knowledge behind applications such as ChatGPT and Google Gemini. This can help establish your organisation as a credible source and thought leader, and raise the chances of your brand being mentioned or suggested in AI-generated answers and summaries.

- Broader Content Reach and Influence: AI models are consulted by a rapidly growing audience. Allowing bots to crawl your site helps ensure your content reaches a wider range of platforms and users, extending your influence beyond traditional search engines.

- Emerging Monetisation Opportunities: New models such as Cloudflare’s “pay per crawl” are being introduced, allowing content owners to charge AI crawlers for access. This could create new revenue streams for publishers and website owners who allow AI bots to access their content.
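At the HTTP level, a charge-for-access scheme maps naturally onto the long-reserved `402 Payment Required` status code. The function below is a deliberately simplified, hypothetical decision rule, not Cloudflare's pay-per-crawl implementation: known AI crawlers without proof of payment get 402, paying crawlers get 200, and ordinary visitors are unaffected. The `CHARGED_CRAWLERS` set and the `has_paid` flag stand in for whatever identification and billing mechanism a real system would use.

```python
from http import HTTPStatus

# Hypothetical set of AI crawlers the site owner wants to charge.
CHARGED_CRAWLERS = {"GPTBot", "ClaudeBot"}


def access_decision(crawler_name, has_paid):
    """Return the HTTP status a simple pay-per-crawl gate might send.

    crawler_name: identified crawler, or None for an ordinary visitor.
    has_paid: whether the request carries valid payment (hypothetical flag).
    """
    if crawler_name not in CHARGED_CRAWLERS:
        return HTTPStatus.OK  # humans and uncharged bots pass through
    if has_paid:
        return HTTPStatus.OK
    return HTTPStatus.PAYMENT_REQUIRED  # 402: payment needed before crawling


print(int(access_decision("GPTBot", has_paid=False)))
print(int(access_decision(None, has_paid=False)))
```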

Conclusion

Cloudflare’s evolving approach to AI crawler management marks a significant shift in how website owners can protect and leverage their digital content. By introducing robust, permission-based controls, Cloudflare empowers site operators to make informed decisions tailored to their unique business goals and risk tolerance. Whether your priority is safeguarding intellectual property, controlling data usage, or maximising your visibility and influence in AI-driven search, the tools are now in your hands.

As we learn more and the goalposts move, for better or worse, website owners should regularly reassess their strategies for AI crawlers. Carefully weighing the benefits of increased reach and potential monetisation against the risks of unauthorised use will help keep your content both protected and positioned for growth.

Thankfully, the choice to block or allow AI crawlers is no longer a technical hurdle; it’s a strategic decision, and Cloudflare’s latest updates make it easier than ever to act in your site’s best interest.